What is technical SEO in digital marketing? It is the process of optimizing a website's infrastructure to improve its performance and visibility in search engines. It covers aspects such as site speed, mobile-friendliness, crawlability and indexing, and structured data. It helps businesses grow their online presence, since more organic traffic can lead to more conversions. In this article, we explore technical SEO techniques, how they work, and why they are essential to success in digital marketing.

Understanding the Fundamentals of Technical SEO

Before diving into specific technical SEO methods, it helps to understand the basic principles they are built on. Technical SEO is the backbone of both on-page and off-page optimization. When your website follows search engine guidelines, search engines can properly crawl, index, and rank your pages.

The Importance of Website Structure

“Crafting a seamless website structure is the foundation for user engagement—guiding visitors effortlessly from curiosity to conversion.”

A well-structured website enhances both user experience and search engine crawling. Organizing content logically using categories and subcategories allows users to navigate your site effortlessly.

Properly structuring your website includes creating a clear hierarchy of content, utilizing breadcrumbs, and implementing a sitemap. These elements facilitate easy access to information for both users and search engines. An intuitive structure also decreases bounce rates, as users are more likely to engage with your content if they can find what they’re looking for quickly.

Additionally, a well-organized site helps search engines understand the relationships between different pages, allowing for better indexing. A coherent hierarchy ensures that your important pages receive the attention they deserve during crawling and ranking processes.

Below is a table illustrating a common website structure and the hierarchical relationships between its pages.

| Level | URL Structure | Page Name | Description |
|---|---|---|---|
| 1 | www.example.com | Home | The main landing page of the website. |
| 2 | www.example.com/about | About Us | Information about the organization or individual. |
| 2 | www.example.com/services | Services | Overview of services offered. |
| 3 | www.example.com/services/web-design | Web Design | Details about web design services offered. |
| 3 | www.example.com/services/seo | SEO Services | Information on search engine optimization services. |
| 2 | www.example.com/blog | Blog | A section for articles, news, or updates. |
| 3 | www.example.com/blog/category1 | Category 1 | A specific blog category. |
| 4 | www.example.com/blog/category1/post1 | Blog Post Title 1 | Individual blog post under Category 1. |
| 4 | www.example.com/blog/category1/post2 | Blog Post Title 2 | Another individual blog post under Category 1. |
| 2 | www.example.com/contact | Contact Us | Page with contact information (form, address, phone). |
| 2 | www.example.com/faq | FAQ | Frequently asked questions on common topics. |
| 2 | www.example.com/testimonials | Testimonials | Customer reviews and testimonials about services. |

Explanation:

- Level: Indicates the hierarchy of pages. Level 1 is the homepage, Level 2 includes major sections, and so on.

- URL Structure: Shows how the URLs are organized based on the website’s pathways.

- Page Name: Descriptive name of each page as displayed to users.

- Description: Brief explanation of the page’s content and purpose.

This structure ensures that visitors can navigate through the website efficiently while helping search engines to understand the site’s organization, leading to better indexing and potentially higher rankings in search results.
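The "Level" column above follows directly from the URL path: each path segment adds one level below the homepage. The short sketch below derives a page's level and its breadcrumb trail from its URL. The helper names `page_level` and `breadcrumb_trail` are hypothetical, and the code assumes HTTPS when a URL has no scheme.

```python
from urllib.parse import urlsplit


def _normalise(url: str) -> str:
    # urlsplit only recognises the host when a scheme is present,
    # so assume https:// for bare URLs such as "www.example.com/about".
    return url if "://" in url else "https://" + url


def page_level(url: str) -> int:
    """Homepage is level 1; each non-empty path segment adds one level."""
    segments = [s for s in urlsplit(_normalise(url)).path.split("/") if s]
    return len(segments) + 1


def breadcrumb_trail(url: str) -> list[str]:
    """Return every ancestor URL from the homepage down to the page itself."""
    parts = urlsplit(_normalise(url))
    base = f"{parts.scheme}://{parts.netloc}"
    segments = [s for s in parts.path.split("/") if s]
    trail = [base + "/"]
    for depth in range(1, len(segments) + 1):
        trail.append(base + "/" + "/".join(segments[:depth]))
    return trail
```

For example, `page_level("www.example.com/blog/category1/post1")` returns 4, matching the table. The same trail can feed the breadcrumb navigation mentioned earlier.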

URL Optimization Strategies

URL optimization is an essential aspect of search engine optimization (SEO) that influences how search engines crawl and index your web pages. A well-optimized URL can improve user experience, increase click-through rates, and support organic rankings. The table below summarizes key URL optimization strategies:

| Strategy | Description | Benefits |
|---|---|---|
| Use Descriptive Keywords | Include relevant keywords in the URL to provide context about the page content. | Improves search engine visibility and relevance. |
| Keep URLs Short and Simple | Limit URL length and avoid unnecessary parameters so URLs are easy to read and remember. | Enhances usability and shareability. |
| Hyphenate Words | Use hyphens (-) to separate words instead of underscores (_) or other characters. | Improves readability for users and search engines. |
| Avoid Special Characters | Avoid special characters (such as %, $) in URLs, as they can cause problems during crawling. | Reduces errors and makes URLs easier to crawl. |
| Use Lowercase Letters | Use lowercase letters consistently, because URL paths are case-sensitive and search engines can treat /Page and /page as different URLs. | Prevents duplicate content issues. |
| Create a Logical Structure | Organize URLs in a hierarchy that reflects the site's content architecture (e.g., www.example.com/category/subcategory). | Helps users and search engines navigate the site. |
| Include Relevant Categories | Use categories where applicable (e.g., product types) to segment content clearly and improve topical relevance. | Enhances contextual relevance and improves indexing. |
| Utilize Canonical Tags | When similar content exists at multiple URLs, use canonical tags to indicate the preferred version to search engines. | Consolidates ranking signals on one URL and prevents duplicate content issues. |
| Add Target Audience Context | Consider who the target audience is and reflect their language and interests in the URL. | Increases engagement by aligning with user intent. |
| Avoid Keyword Stuffing | Don't overuse keywords; keep URLs natural and user-friendly to avoid search engine penalties. | Follows SEO best practices and avoids looking spammy. |

These strategies collectively contribute to creating a clean, informative, and user-friendly URL structure that can significantly impact SEO performance and overall website usability.

Optimizing URLs is another essential technical SEO practice. Clean, descriptive URLs are easier for people to read, and they signal to search engines what a page is about. Keep URLs short and include a relevant keyword or two that describes the page's content.

Keywords in your URLs help search engines understand what a page is about when they crawl it, although they are only one of many ranking signals. They can also improve click-through rates, because users are more likely to click a link that clearly shows what the page contains.
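Several of the strategies above (lowercase letters, hyphens between words, no special characters, short URLs) can be applied automatically when a URL slug is generated from a page title. The function below is a minimal sketch of that idea. The name `slugify` and the `max_words` cap are illustrative assumptions, and the function does not remove stop words.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 6) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Fold accented letters to plain ASCII ("é" -> "e") and drop other non-ASCII characters.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Lowercase, then collapse every run of non-alphanumeric characters into one space.
    text = re.sub(r"[^a-z0-9]+", " ", text.lower())
    # Keep the slug short by capping the number of words (an arbitrary limit).
    return "-".join(text.split()[:max_words])
```

For example, `slugify("10 Tips for Faster Page Speed!")` produces `10-tips-for-faster-page-speed`. Review the output before publishing, because truncating a long title can drop the words that make a slug descriptive.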

Consistency in your URL structure also matters. If you change URLs frequently, you risk losing the authority those pages have built up and sending visitors to dead links or 404 pages, which creates a poor user experience.
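When a URL does have to change, the usual remedy is a permanent (301) redirect from the old address to the new one, so visitors and crawlers land on the right page instead of a 404. In practice this is normally configured in your web server, CMS, or CDN. The sketch below shows the lookup logic only. The `REDIRECTS` table and its paths are hypothetical examples.

```python
from typing import Optional

# Hypothetical mapping from retired URL paths to their replacements.
REDIRECTS = {
    "/services/seo-services": "/services/seo",
    "/blog/old-post": "/blog/category1/post1",
}


def resolve(path: str, known_paths: set[str]) -> tuple[int, Optional[str]]:
    """Return (status_code, redirect_location) for a requested path."""
    if path in known_paths:
        return 200, None
    if path in REDIRECTS:
        # 301 Moved Permanently tells browsers and crawlers to use the new URL from now on.
        return 301, REDIRECTS[path]
    return 404, None
```

Keeping the old-to-new mapping in one place also makes it easy to confirm that every retired URL still leads somewhere useful.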

Mobile Responsiveness and Its Impact

Mobile responsiveness is essential in today's digital market. Most internet traffic now comes from mobile devices, and Google favors mobile-friendly sites in its rankings.

Make sure your website is responsive. Use flexible layouts, scalable images, and CSS media queries that adapt the content to the screen it is viewed on, whether a desktop, a phone, or a tablet. Responsive design improves the user experience and can lower bounce rates, because visitors stay longer when they can easily browse your site on their device.
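A responsive layout only works on phones if the page declares a viewport, typically `<meta name="viewport" content="width=device-width, initial-scale=1">`. Without it, mobile browsers render the page at desktop width and scale it down. The sketch below uses Python's standard `html.parser` to check a page's HTML for that tag. The class and function names are hypothetical, and the check only verifies the `width=device-width` setting.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content attribute of a <meta name="viewport"> tag, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Attribute values can be None (e.g. <meta name>), so default to "".
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content")


def has_responsive_viewport(html: str) -> bool:
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spaces so "width = device-width" is also accepted.
    return "width=device-width" in finder.viewport.replace(" ", "")
```

This is a quick first check, not a substitute for testing the page on real devices.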

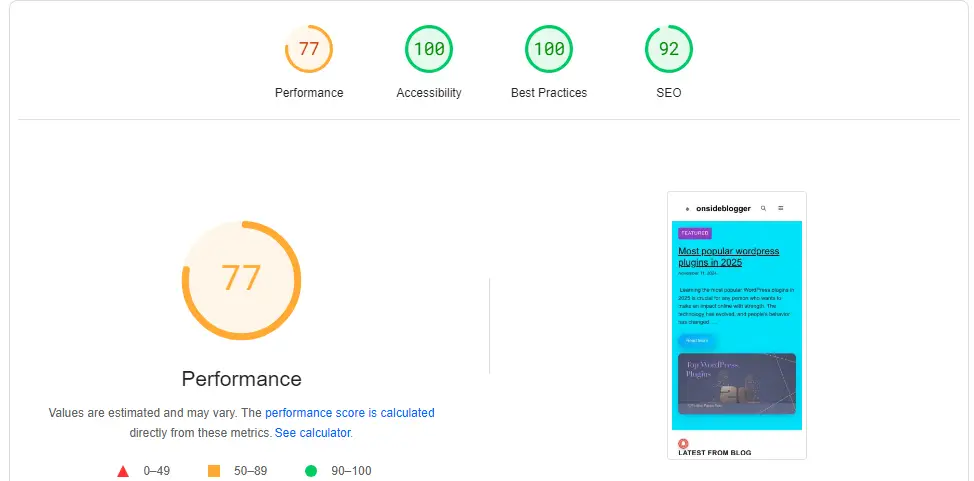

Don't forget loading speed on mobile devices. A page that loads quickly on a desktop may still be slow on mobile, so test thoroughly on a range of devices. Google's PageSpeed Insights tool reports on mobile performance and suggests the optimizations your pages need.
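PageSpeed Insights can also be queried programmatically, which is handy when you need to check many pages. The sketch below builds a request for the PageSpeed Insights API (v5) and reads the Lighthouse performance score from the JSON response. The endpoint and the `lighthouseResult.categories.performance.score` field reflect our understanding of the v5 API, so verify them against Google's current documentation. Heavier use may also require an API key. The helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# PageSpeed Insights API (v5) endpoint; confirm against Google's documentation.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the API request URL; strategy is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(response: dict) -> int:
    """Convert Lighthouse's 0-1 performance score into the familiar 0-100 scale."""
    return round(response["lighthouseResult"]["categories"]["performance"]["score"] * 100)


def fetch_mobile_score(page_url: str) -> int:
    """Call the API over the network and return the page's mobile performance score."""
    with urlopen(psi_request_url(page_url, "mobile"), timeout=120) as resp:
        return performance_score(json.load(resp))
```

Running `fetch_mobile_score` for both `strategy` values on the same page is a simple way to spot pages that are fine on desktop but slow on mobile.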

Enhancing Website Speed for Optimal Performance

Website speed is one of the technical SEO factors with the biggest impact on user experience and search rankings. Slow-loading pages drive users away, which leads to lower engagement, higher bounce rates, and lower conversion rates.

Analyzing Page Load Times

Before building a table of page load times, it helps to define the metric. Page load time is the amount of time it takes for a web page to fully load and become interactive for the user. This metric is crucial because it can significantly affect user experience, search engine rankings, and conversion rates.

Here’s a structured approach to create an example table of page load times:

Example Table: Page Load Times

| Website | Average Load Time (Seconds) | Type of Content | Optimization Techniques Used |
|---|---|---|---|
| Example.com | 2.5 | E-commerce | Image compression, minimized CSS/JS |
| NewsSite.org | 3.0 | News articles | Lazy loading, CDN usage |
| BlogExample.net | 1.8 | Personal blog | Caching, optimized images |
| TravelSite.com | 4.5 | Travel booking | Asynchronous loading, server optimization |
| PortfolioPage.io | 2.0 | Portfolio | Responsive design, reduced redirects |

Explanation of Table Columns:

- Website: The name or URL of the website being analyzed.

- Average Load Time (Seconds): The average time it takes for the web page to load completely, measured in seconds.

- Type of Content: The nature of the content available on the site (e.g., e-commerce, news articles, personal blog).

- Optimization Techniques Used: Methods and strategies implemented by the website to improve load times, such as image compression, caching, using Content Delivery Networks (CDN), lazy loading, etc.

Why This Table is Useful

- Comparative Analysis: It provides a quick overview of how different types of websites perform in terms of load time, allowing stakeholders to identify trends or areas needing improvement.

- Performance Benchmarking: Webmasters can benchmark their site’s performance against competitors or industry standards.

- Identifying Optimization Needs: By listing the optimization techniques used, the table highlights best practices that can be adopted by others to enhance their site’s performance.

Considerations

When creating a table like this, it’s important to use actual metrics sourced from reliable tools (like Google PageSpeed Insights, GTmetrix, or WebPageTest) to ensure accuracy. Tools often provide additional insights about what factors contribute to load times, which can further inform optimization strategies.
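Between full audits with those tools, a quick script can track how long your server takes to return a page's HTML. The sketch below times several fetches and reports the mean and median. It measures only the HTML document download, not images, scripts, or rendering, so it is not the same as the page load time reported by PageSpeed Insights or GTmetrix. The function names are hypothetical, and `fetch` is injectable so the timing logic can be tested without a network.

```python
import statistics
import time
from typing import Callable
from urllib.request import urlopen


def fetch_html(url: str) -> bytes:
    """Download a page's HTML document only (no images, scripts, or styles)."""
    with urlopen(url, timeout=30) as response:
        return response.read()


def time_document_fetch(url: str, runs: int = 5,
                        fetch: Callable[[str], bytes] = fetch_html) -> dict:
    """Fetch a URL several times and summarise the durations in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch(url)
        samples.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "mean_s": statistics.mean(samples),
        "median_s": statistics.median(samples),
    }
```

The median is included because a single slow request (for example, a cold cache) can skew the mean.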

Optimizing website speed starts with measuring current load times using tools such as GTmetrix or Pingdom. These tools pinpoint what is slowing a page down, such as unoptimized images or too many HTTP requests.

Once you have these insights, focus on the areas that need the most work. For instance, compress large image files and enable browser caching to speed up load times. Another option is a Content Delivery Network (CDN), which serves your site's content from servers close to your users, wherever they are in the world.
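Browser caching is controlled by the `Cache-Control` HTTP header your server sends with each file. A common policy is to cache static assets such as CSS, JavaScript, images, and fonts for a long time, while asking browsers to revalidate HTML so visitors always see the latest page. The sketch below picks a header value by file extension. The extension list and the one-year `max-age` are illustrative assumptions. Long caching is only safe if asset filenames change when their content changes (for example, `app.3f9a1c.css`).

```python
import os

# Hypothetical policy: fingerprinted static assets can be cached for a year.
LONG_CACHE_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Choose a Cache-Control header value for a requested file path."""
    extension = os.path.splitext(path)[1].lower()
    if extension in LONG_CACHE_EXTENSIONS:
        # 31536000 seconds = 365 days; "immutable" tells browsers not to revalidate.
        return "public, max-age=31536000, immutable"
    # "no-cache" still allows caching, but the browser must revalidate before reuse.
    return "no-cache"
```

Your web server, CMS, or CDN settings are where a policy like this would actually be applied.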

Monitor your site's speed regularly. Ongoing optimization leads to sustainable improvements, but you will only know you are on the right track if you set load-time benchmarks and goals, track them, and keep refining your strategy based on performance data.

The Role of Image Optimization

Images make up a large share of a page's total weight, so optimizing them can noticeably improve load times. Start by choosing the right file format: JPEG for photographs, PNG for graphics that need transparency, and SVG for logos and icons.

Next, compress your images without visible loss of quality. Many online tools and plugins make this easier by reducing file sizes while keeping images sharp. Finally, use descriptive filenames and alt text. These help search engines understand what an image shows and make your content accessible to visually impaired users.

Lazy loading defers loading images until they are about to scroll into view. This speeds up the initial page load and gives users a better experience.
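Browsers support native lazy loading through the `loading="lazy"` attribute on `<img>` tags. The sketch below audits a page's HTML for two of the points above: images with no `alt` attribute, and images without `loading="lazy"`. The class and function names are hypothetical. Treat the lazy-loading list as suggestions only, because images visible as soon as the page opens (such as a hero banner) are usually better loaded right away.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collects <img> sources that lack an alt attribute or loading="lazy"."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []
        self.not_lazy: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        # alt="" is valid for purely decorative images, so only a missing attribute is flagged.
        if "alt" not in attributes:
            self.missing_alt.append(src)
        if (attributes.get("loading") or "").lower() != "lazy":
            self.not_lazy.append(src)


def audit_images(html: str) -> ImageAudit:
    audit = ImageAudit()
    audit.feed(html)
    audit.close()
    return audit
```

Running it over a handful of key templates is usually enough to catch systematic gaps, such as a theme that never outputs alt text.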

Minimizing HTTP Requests

Every element on your page, including scripts, stylesheets, and images, requires an HTTP request to load, so more requests can make your page slower. To address this, reduce the number of files wherever possible. One approach is to merge files: for example, combine multiple CSS files into one stylesheet and multiple JavaScript files into one script to reduce the total number of requests.
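Merging files is usually handled by a build tool or a CMS plugin, but the underlying idea is simple concatenation in a fixed order. Order matters because later CSS rules override earlier ones and scripts may depend on each other. The sketch below bundles several CSS (or, separately, JavaScript) files into one. The function names are hypothetical. With HTTP/2, browsers can fetch many files over one connection, so measure before and after to confirm that bundling actually helps your site.

```python
from pathlib import Path


def concatenate_sources(sources: list[tuple[str, str]]) -> str:
    """Join (filename, contents) pairs into one bundle, preserving their order."""
    # A /* filename */ comment (valid in both CSS and JS) marks where each file starts.
    return "\n".join(f"/* {name} */\n{contents}" for name, contents in sources)


def bundle_files(paths: list[str], output: str) -> None:
    """Read the files in the given order and write them out as one bundle."""
    sources = [(Path(p).name, Path(p).read_text(encoding="utf-8")) for p in paths]
    Path(output).write_text(concatenate_sources(sources), encoding="utf-8")
```

For example, `bundle_files(["reset.css", "layout.css", "theme.css"], "site.css")` would replace three stylesheet requests with one.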

Remove unused plugins or widgets that serve no purpose but add extra requests. A plugin may add functionality, but it can also cause performance problems. Audit your site regularly to identify what benefits it and remove what does not.

Ensuring Proper Indexing and Crawlability

For search engines to effectively display your web pages in search results, they must first be able to index them. Ensuring proper indexing and crawlability involves several strategies that enhance your site’s visibility.

Creating and Submitting a Sitemap

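An example of an XML sitemap for search engines:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2023-10-01</lastmod>
    <changefreq>weekly</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://www.example.com/about</loc>
    <lastmod>2023-09-25</lastmod>
    <changefreq>monthly</changefreq>
    <priority>0.8</priority>
  </url>
  <url>
    <loc>https://www.example.com/contact</loc>
    <lastmod>2023-09-20</lastmod>
    <changefreq>yearly</changefreq>
    <priority>0.5</priority>
  </url>
</urlset>
```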

This XML sitemap lists three pages of a website, with information about their last modified date, change frequency, and priority.

A sitemap is essentially a map of your website that guides search engines through your content. Submitting an XML sitemap helps search engines discover your pages more quickly and makes it more likely that all relevant pages get indexed.

Your sitemap should include all the important pages on your site, along with metadata such as when each page was last updated. Tools like Google Search Console make submitting a sitemap easy and also let you track how well your site is indexed. Update your sitemap whenever you add or remove pages so that search engines see your site's current structure.
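Most CMSs and SEO plugins generate sitemaps automatically, but a small script works for custom sites. The sketch below builds a sitemap like the example above using Python's standard `xml.etree.ElementTree`, which also escapes characters such as `&` in URLs. It writes only `<loc>` and `<lastmod>`. Google's sitemap documentation states that Google ignores `<changefreq>` and `<priority>`, although other search engines may read them. The `build_sitemap` name and its input format are assumptions.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[dict]) -> str:
    """Build sitemap XML from dicts with a 'loc' key and an optional 'lastmod' key."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        if "lastmod" in page:
            # W3C date format, e.g. "2023-10-01".
            ET.SubElement(url, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Regenerating the file whenever pages are added or removed keeps the sitemap in step with the site, as recommended above.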

Utilizing Robots.txt Files

Here's a shortened example of a robots.txt file:

```text
User-agent: *
Disallow: /private/
Allow: /public/
```

This example tells all web crawlers not to access the /private/ directory but allows them to access the /public/ directory.

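Robots.txt is a file that tells search engine crawlers which parts of your site they may crawl. Used wisely, it keeps crawlers from wasting resources on irrelevant pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other sites link to it. To keep a page out of search results, let it be crawled and add a noindex meta tag instead.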

Make sure your robots.txt file is configured correctly, because a wrong rule can block valuable content from being crawled. Use the robots.txt report in Google Search Console, which replaced the older robots.txt Tester, to check your setup.
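You can also check your rules locally before deploying them. Python's standard `urllib.robotparser` module parses robots.txt content and answers whether a given crawler may fetch a URL. The sketch below tests the example file shown earlier against a few URLs. The helper name `crawl_permissions` is hypothetical. Python's parser applies rules in the order they appear, whereas Google uses the most specific matching rule, so results can differ for overlapping `Allow` and `Disallow` lines.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""


def crawl_permissions(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Report, for each URL, whether the given user agent may crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network request
    return {url: parser.can_fetch(agent, url) for url in urls}
```

With the example rules, `https://www.example.com/private/report.html` comes back as blocked, while `/public/` pages and unlisted paths such as `/about` are allowed.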

Implementing Structured Data Markup

Structured data markup helps search engines understand your content. Adding schema markup to your web pages gives search engines more context, so they can display your pages as richer search results, known as rich results.

A major advantage of structured data is that enhanced listings can earn higher click-through rates, because people are drawn to visually richer results. To test your implementation against current guidelines, use tools such as Google's Rich Results Test or the Schema Markup Validator, which replaced Google's Structured Data Testing Tool.
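Schema markup is most often added as a JSON-LD block in the page's HTML. The sketch below generates one for a schema.org `Article`. The helper name and the example values are hypothetical, and real pages may need more properties depending on the rich result you are targeting. Run the output through the Rich Results Test before relying on it.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2023-10-01"
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"
```

The generated tag can be placed in the page's `<head>` or `<body>`.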

Monitoring and Fixing Crawl Errors

Regularly monitoring crawl errors is essential to maintaining a healthy website. Utilize Google Search Console to identify and rectify issues, such as broken links or server errors.

Addressing these errors promptly prevents them from negatively impacting user experience and search engine rankings. Make it a habit to conduct periodic audits of your website’s health to stay ahead of potential issues.
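Search Console remains the authoritative source for crawl errors, but a quick script can spot broken links in a list of your own URLs between reports. The sketch below requests each URL and flags 4xx/5xx responses, as well as URLs that could not be reached. The function names are hypothetical. HEAD requests keep the check light, but some servers reject them, so treat a 405 as a reason to retry with GET. The status checker is injectable so the filtering logic can be tested offline.

```python
from typing import Callable, Iterable
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def status_of(url: str) -> int:
    """Return the final HTTP status for a URL (after redirects); 0 if unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=15) as response:
            return response.status
    except HTTPError as err:  # 4xx/5xx responses raise HTTPError
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return 0


def find_crawl_errors(urls: Iterable[str],
                      get_status: Callable[[str], int] = status_of) -> dict[str, int]:
    """Return only the URLs that are unreachable or answer with a 4xx/5xx status."""
    results = {url: get_status(url) for url in urls}
    return {url: code for url, code in results.items() if code == 0 or code >= 400}
```

Feeding it the URLs from your sitemap is a simple way to make sure every listed page actually loads.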

Leveraging Secure Connections with HTTPS

Security is a critical component of technical SEO that has gained considerable importance in recent years. Implementing HTTPS not only protects user data but also positively impacts search engine rankings.

Understanding the Significance of HTTPS

HTTPS encrypts the data exchanged between users and websites, keeping it private and secure. Search engines, most notably Google, favor secure sites, so not having HTTPS can hurt your site's search visibility.

To switch to HTTPS, obtain an SSL/TLS certificate from a trusted certificate authority and install it. Then make sure every page on your site is accessible over the secure protocol. Finally, redirect all HTTP traffic to HTTPS so that the same content is not served at two different addresses, which would create duplicate content.
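The HTTP-to-HTTPS redirect is normally a single rule in your web server or CDN configuration. The sketch below shows the logic that rule implements: keep the host, path, and query string, change only the scheme, and answer with a permanent 301 redirect. The function name is hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit


def https_redirect(url: str) -> Optional[tuple[int, str]]:
    """Return (301, https_url) for an http:// URL, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Preserve host, path, and query exactly; only the scheme changes.
    return 301, parts._replace(scheme="https").geturl()
```

Preserving the full path and query, rather than sending everything to the homepage, keeps each page's links and ranking signals pointing at its own HTTPS equivalent.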

The Implications for User Trust and Engagement

Users are increasingly wary of unsecured sites, and displaying a “Not Secure” warning can deter visitors. By implementing HTTPS, you signal to users that their data is safe, fostering trust and encouraging engagement with your brand.

Consider integrating trust badges and security seals throughout your site to further reassure users about your commitment to security. This approach can enhance credibility and lead to higher conversion rates as customers feel more comfortable sharing personal information.

Addressing Mixed Content Issues

After moving to HTTPS, it is important to find and fix mixed content. Mixed content occurs when a page loaded over HTTPS includes some resources loaded over an insecure HTTP connection, which undermines the security of the whole page.

Use this checklist to make sure your website has no mixed content warnings:

- Check your website for mixed content warnings.

- Fix every such problem by making sure all resources, including images, scripts, and stylesheets, are served over HTTPS.
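For the first item on that checklist, a small script can scan a page's HTML for resources still referenced over `http://`. The sketch below uses Python's `html.parser` and looks at `src` attributes (images, scripts, iframes, media) and stylesheet `<link href>` values. Ordinary `<a href>` links are ignored because they are navigation, not embedded resources. The names are hypothetical, and the scan will miss resources loaded from CSS files or by JavaScript, so confirm the results in your browser's developer console.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Collects (tag, url) pairs for subresources referenced over plain HTTP."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        url = attributes.get("src")
        # Stylesheets are loaded via <link rel="stylesheet" href="...">.
        if tag == "link" and "stylesheet" in (attributes.get("rel") or "").lower():
            url = attributes.get("href")
        if url and url.lower().startswith("http://"):
            self.insecure.append((tag, url))


def find_mixed_content(html: str) -> list[tuple[str, str]]:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```

Each flagged URL can usually be fixed by switching it to `https://`, provided the resource is available over HTTPS.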

FAQs

What is technical SEO?

Technical SEO refers to the process of optimizing a website and server to help search engine spiders crawl and index your site more effectively. It involves improving elements such as site speed, mobile-friendliness, site architecture, and security.

Why is technical SEO important?

Technical SEO is crucial because it helps ensure that search engines can successfully crawl and index your website. If search engines struggle to access your site, it will negatively impact your rankings in search results, ultimately leading to lower visibility and traffic.

How does technical SEO differ from traditional SEO?

While traditional SEO focuses on content optimization (keywords, meta tags, backlinks), technical SEO emphasizes the behind-the-scenes factors that influence how search engines interact with your website. This includes site performance, usability, and indexing issues.

What are some key components of technical SEO?

Key components of technical SEO include website speed optimization, mobile responsiveness, secure HTTPS protocol, XML sitemaps, structured data markup, canonical tags, and proper use of robots.txt files.

Can I handle technical SEO on my own?

Depending on your skill level, you may be able to manage some aspects of technical SEO yourself using various tools and resources available online. However, for more complex issues, hiring a professional or SEO agency may be beneficial to achieve optimal results.

How does technical SEO impact website performance?

Good technical SEO can significantly improve website performance by enhancing loading speeds, ensuring a smooth user experience, and reducing bounce rates. All these factors contribute to better engagement and higher search rankings.

Are there specific tools for auditing technical SEO?

Yes, there are several tools available for auditing technical SEO, such as Google Search Console, SEMrush, Ahrefs, Screaming Frog, and Moz. These tools help analyze and identify areas needing improvement on your website.

How often should I conduct a technical SEO audit?

It’s recommended to conduct a technical SEO audit at least annually, or whenever significant changes are made to your website. Regular audits help identify and resolve issues before they affect your site’s performance and rankings.

Conclusion

In digital marketing, technical SEO makes a website more visible, helps it perform well, and, most importantly, makes it better for users. By understanding technical SEO and applying strategies for site structure, speed, indexing, and security, you can build your business on a firm foundation.

As search engines evolve, keeping up with the latest technical SEO trends and best practices will help marketers compete and thrive in a dynamic digital landscape. Technical SEO is not about checking boxes; it is a holistic approach to online success that builds brand recognition, user satisfaction, and conversion rates.